ImagePullBackOff

Hello all.

I need some help with this problem.

So, I'm using Ubuntu 16.04 and have one master and one worker.

My master node IP is 192.168.0.35.

My worker node IP is 192.168.0.36.

Both use a static IP on a bridged enp0s3 interface, with gateway 192.168.0.1 and DNS 8.8.8.8.

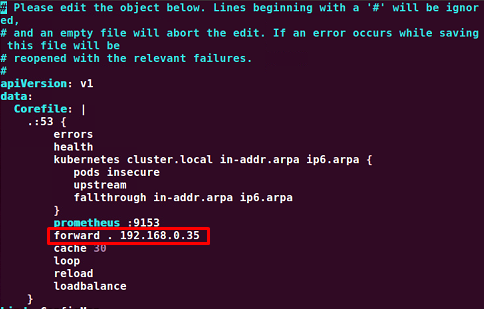

I had previously changed the ConfigMap of my CoreDNS pod.

When I tried to create a pod, pulling the image from Docker failed with ImagePullBackOff status, but this only happened on my master node. On my worker node everything worked fine: no errors, and the pods reached Running status. The problem goes away when I restart the master node, sometimes once and sometimes several times, until all pods are running.

Does anybody know why this happens? Is there a problem with my network configuration?

Comments


Hello,

If you can share the error and the command you ran to generate it, it will help with the troubleshooting.

Changing the network configuration after the cluster has been initialized is tricky at best. I note you are using an IP in the 192.168.0.0 range, which is the default range used by Calico to provide IP addresses to the pods. This can cause network issues.
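A quick way to see the overlap described above is to compare the ranges with Python's standard `ipaddress` module. This is a minimal sketch, assuming the node subnet is 192.168.0.0/24 (from the node IPs and /24 mask given in this thread) and that Calico uses its default 192.168.0.0/16 pool; the 10.244.0.0/16 range is only a hypothetical non-overlapping alternative.

```python
import ipaddress

def ranges_overlap(a: str, b: str) -> bool:
    """Return True if the two CIDR ranges share at least one address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))

def node_in_pool(node_ip: str, pool: str) -> bool:
    """Return True if a node's address falls inside a pod IP pool."""
    return ipaddress.ip_address(node_ip) in ipaddress.ip_network(pool)

# Node subnet vs. Calico's default pod pool (values from this thread).
print(ranges_overlap("192.168.0.0/24", "192.168.0.0/16"))  # True
print(node_in_pool("192.168.0.35", "192.168.0.0/16"))      # True
# Hypothetical alternative pod range that does not overlap the node subnet.
print(ranges_overlap("192.168.0.0/24", "10.244.0.0/16"))   # False
```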

To troubleshoot, I would check the IP of the master node when this problem occurs, then use `docker run` to see whether I can pull and run the container outside of Kubernetes. This will narrow down where to look for the issue.

I think a better solution is to rebuild the cluster and either change the range of IPs of the nodes, or edit the calico.yaml file and the kubeadm init command to use a range without overlap. If you refer to the lab exercise, you'll note there are steps about exactly this issue, showing where to find the setting in the calico.yaml file and how to pass it to the kubeadm init command so the IPs do not overlap.
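Choosing a pod range "without overlap" can be sketched as picking the first candidate CIDR that collides with none of the ranges already in use. This is an illustration only: the candidate list is hypothetical, and the existing ranges are the node LAN and the docker0 bridge mentioned later in this thread.

```python
import ipaddress
from typing import Iterable, Optional

def pick_pod_cidr(existing: Iterable[str], candidates: Iterable[str]) -> Optional[str]:
    """Return the first candidate CIDR that overlaps none of the existing ranges."""
    taken = [ipaddress.ip_network(c) for c in existing]
    for cand in candidates:
        net = ipaddress.ip_network(cand)
        if not any(net.overlaps(t) for t in taken):
            return cand
    return None  # every candidate collides with something already in use

existing = ["192.168.0.0/24", "172.17.0.0/16"]  # node LAN and docker0 bridge
candidates = ["192.168.0.0/16", "10.244.0.0/16"]  # hypothetical options
print(pick_pod_cidr(existing, candidates))  # 10.244.0.0/16
```

Whatever range is chosen has to be used consistently in both places the reply mentions: the pool setting in calico.yaml (in the standard manifest this is usually the `CALICO_IPV4POOL_CIDR` variable) and the `--pod-network-cidr` value passed to `kubeadm init`.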

Regards,


Several master node restarts forced your pods to receive new IP addresses, probably until there was no more overlap between their IPs and your nodes' IPs. In multi-node clusters, provided Calico was started with its default configuration, the Calico network plugin assigns pod IPs from the 192.168.0.X subnet on the first node, from 192.168.1.X on the second node, from 192.168.2.X on a potential third node, and so on. With this in mind, I can see how the IPs of pods running on the master node could overlap with your nodes' IPs, since both ranges are on 192.168.0.X, causing DNS confusion.
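The per-node allocation described above can be modeled in a few lines to show which node addresses land inside a pod block. This is a sketch of the simplified model in the reply (node *i* gets the *i*-th /24 of the pool); Calico's real IPAM block size and assignment depend on its configuration, so treat the result as illustrative.

```python
import ipaddress
from itertools import islice

def per_node_blocks(pool: str, node_count: int, prefix: int = 24):
    """Simplified model: node i receives the i-th /prefix block of the pool."""
    net = ipaddress.ip_network(pool)
    return list(islice(net.subnets(new_prefix=prefix), node_count))

def colliding_node_ips(pool: str, node_ips: list, prefix: int = 24) -> dict:
    """Map each modeled pod block to the node IPs that fall inside it."""
    hits = {}
    for block in per_node_blocks(pool, len(node_ips), prefix):
        clashes = [ip for ip in node_ips if ipaddress.ip_address(ip) in block]
        if clashes:
            hits[str(block)] = clashes
    return hits

print(colliding_node_ips("192.168.0.0/16", ["192.168.0.35", "192.168.0.36"]))
# {'192.168.0.0/24': ['192.168.0.35', '192.168.0.36']}
```

Under this model, the first node's pod block contains both node addresses, which matches the overlap the reply points to.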

Regards,

-Chris

Here are more details.

Master node IP 192.168.0.35/24, hostname master

Worker node IP 192.168.0.36/24, hostname ubuntu

Both are static IPs, bridge enp0s3, gateway 192.168.0.1, DNS 8.8.8.8

Calico is using the default, which is 192.168.0.0/16; I did not change anything.

Also, the master node and the worker node both have the same docker0 bridge IP, which is 172.17.0.1/16.

Actually, I'm wondering: is it okay for both of my nodes to have the same docker0 bridge with the same IP?

This is my cluster info

This is my /etc/hosts on master

This is my /etc/hosts on worker

When I ran `docker run` on my worker, it worked fine, but when I ran it on my master, I got this error

The commands I ran before I got the error are:

- `kubectl apply -n sock-shop -f complete-demo.yaml`

- `kubectl get pods -n sock-shop -o wide`

These are the pods that got the error:

1. payment-7d4d4bf9hb4-hgbrx

2. queue-master-6b5b5c7658-rr5gb

Are you referring to Exercise 3.1, steps 8 through 10?

Am I having network issues because of how I configured my network?
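The ranges listed in this post can be checked against each other pairwise. On the docker0 question: docker0 is a bridge local to each host, so the same 172.17.0.1/16 address on both nodes is typical for a default Docker install; what matters more is whether any configured range collides with another. This sketch uses only the values quoted in the post; the labels are illustrative.

```python
import ipaddress
from itertools import combinations

def find_overlaps(ranges: dict) -> list:
    """Return (name_a, name_b) pairs whose CIDR ranges share addresses."""
    # strict=False accepts host addresses such as 192.168.0.35/24.
    nets = {name: ipaddress.ip_network(cidr, strict=False) for name, cidr in ranges.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]

ranges = {
    "node LAN (enp0s3)": "192.168.0.35/24",
    "Calico pod pool": "192.168.0.0/16",
    "docker0 bridge": "172.17.0.1/16",
}
print(find_overlaps(ranges))
# [('node LAN (enp0s3)', 'Calico pod pool')]
```

Only the node LAN and the Calico pool collide, which points to the same overlap discussed in the replies rather than to docker0.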

Hi @neirkate,

Thank you for all the details provided. There seem to be several issues related to a misconfigured cluster. As mentioned earlier, the default pod IP range 192.168.0.0/16, used by Calico and the kubeadm init command, overlaps the node IPs configured by your hypervisor. To fix this, the suggestion was to rebuild the cluster with different IP ranges: either change the IPs your hypervisor assigns to the nodes, or edit the calico.yaml file and run the kubeadm init command with a different IP range for pods.

There also seems to be some naming confusion between the ubuntu node and worker node. It seems that pods are scheduled on a node named ubuntu when they should be running on the worker.

I agree with the suggestion of starting clean with 2 new nodes, reinstalling all the components, and re-creating the cluster, making sure there is no overlap between the nodes' IP range and the pods' IP range. Both nodes should have the same type of network interface, with promiscuous mode set to allow all traffic.

Good luck,

-Chris

@chrispokorni

Sorry for the late reply.

I just realized that I had configured my cluster wrongly, especially the network part.

Okay, I will do that. That seems like the best solution for this problem.

Thank you for your help. Have a nice day.
