Lab 3.2 Grow the Cluster - Cilium crashloop with worker nodes

The issue:

When adding workers, the Cilium pods get stuck in CrashLoopBackOff during initialization.

- I followed the instructions from Lab 3.1 through Lab 3.2 to the letter.

- kubectl, kubeadm, and kubelet 1.31.1, marked on hold with apt

Specs

- One host computer running 3 VirtualBox VMs (host: 32 GB RAM, 2 TB storage)

- Each node has 2 cores, 4 GB RAM, and 20 GB of storage

Network

- NAT used to provide easy internet access, for wget and apt install.

- Host-only Adapter used to connect the nodes. Promiscuous mode: "deny". Each machine can ping one another.

Network IPs

- Host-only Ethernet IPv4: 192.168.56.1/24

- CP: 192.168.56.11/24

- Worker1: 192.168.56.21/24

- Worker2: 192.168.56.22/24

Cilium

cluster-pool-ipv4-cidr: 192.168.0.0/16

What I tried

I was worried that Cilium might not be using k8scp, despite it being used in the init file.

cp > cat /etc/hosts

127.0.0.1 localhost
10.0.2.15 cp
192.168.56.11 k8scp

worker1 > cat /etc/hosts

127.0.0.1 localhost
10.0.2.15 worker1
192.168.56.11 k8scp cp
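One way to check that worry is to look at where each name resolves in these files. Below is a small sketch; parse_hosts() is a hypothetical helper written for this post, not a course tool. It assumes the usual /etc/hosts rule that the first line listing a name wins, and uses the two files above as input. Both nodes resolve k8scp to the host-only address, but each node maps its own hostname to 10.0.2.15, which is typically the address VirtualBox's default NAT mode hands to every guest.

```python
"""Sketch: parse /etc/hosts-style text and show where each name resolves.

parse_hosts() is a hypothetical helper written for this example.
"""


def parse_hosts(text: str) -> dict[str, str]:
    """Map each hostname/alias to the first IP that lists it."""
    mapping: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # first match wins
    return mapping


CP_HOSTS = """\
127.0.0.1 localhost
10.0.2.15 cp
192.168.56.11 k8scp
"""

WORKER1_HOSTS = """\
127.0.0.1 localhost
10.0.2.15 worker1
192.168.56.11 k8scp cp
"""

if __name__ == "__main__":
    for node, text in (("cp", CP_HOSTS), ("worker1", WORKER1_HOSTS)):
        hosts = parse_hosts(text)
        print(f"{node}: k8scp -> {hosts['k8scp']}, {node} -> {hosts[node]}")
```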

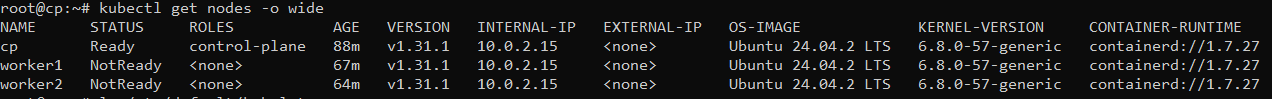

I noticed the node's "internal IP" was linked to my NAT adapter rather than the host-only network.

So I fixed it by manually specifying which IP my kubelets should use, adding this line with sudo vim /etc/default/kubelet:

KUBELET_EXTRA_ARGS="--node-ip=192.168.56.11"
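Note that --node-ip has to be each node's own host-only address (192.168.56.11 on cp, 192.168.56.21 and 192.168.56.22 on the workers), not the cp address on every node. Here is a minimal sketch that picks the right address from a node's IPv4 addresses and renders the line for /etc/default/kubelet. pick_node_ip() and kubelet_extra_args() are hypothetical helpers, and the address list is a made-up example of what you might copy from ip -4 addr on worker1.

```python
"""Sketch: choose the kubelet --node-ip from a node's addresses.

pick_node_ip() and kubelet_extra_args() are hypothetical helpers; the
subnet is the host-only network from this thread (192.168.56.0/24).
"""
import ipaddress

HOST_ONLY_NET = ipaddress.ip_network("192.168.56.0/24")


def pick_node_ip(addresses: list[str], network=HOST_ONLY_NET) -> str:
    """Return the first address that belongs to the given network."""
    for addr in addresses:
        if ipaddress.ip_address(addr) in network:
            return addr
    raise ValueError(f"no address in {network}: {addresses}")


def kubelet_extra_args(node_ip: str) -> str:
    """Render the line that goes into /etc/default/kubelet."""
    return f'KUBELET_EXTRA_ARGS="--node-ip={node_ip}"'


if __name__ == "__main__":
    # Hypothetical addresses for worker1: loopback, NAT, then host-only.
    worker1_addrs = ["127.0.0.1", "10.0.2.15", "192.168.56.21"]
    print(kubelet_extra_args(pick_node_ip(worker1_addrs)))
```

After editing the file, the kubelet has to be restarted (for example with sudo systemctl restart kubelet) before the new address is picked up.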

It now shows:

But my pods are still in CrashLoopBackOff...

I believe I may need to set the default IP (eth0 or something) to my desired host-only adapter. Or maybe there is a Cilium-specific config that I must change.

Any help is welcome. If you have a preferred/recommended VirtualBox setup, tell me. There were no recommendations on how to configure the VM networking in the course, so I followed standard home-lab practices.

Best Answer


Hi @fasmy,

As a follow up to your earlier post, this paints a much clearer picture of your infrastructure and your Kubernetes installation.

- Each node has 2 cores, 4 GB RAM, and 20 GB of storage

I would recommend provisioning at least the cp VM with 8 GB RAM, while the two workers should support the light workload of the lab exercises at 4 GB RAM each (pay close attention to exercises 4.2 and 4.3, which work with memory constraints).

- NAT used to provide easy internet access, for wget and apt install.

- Host-only Adapter used to connect the nodes...

While a mix of NAT and host-only networks may seem to satisfy "standard home-lab practices", a single bridged network adapter per VM has worked very well for VirtualBox VMs in this lab environment. A bridged adapter covers both roles at once: it provides internet access like the NAT adapter and node-to-node connectivity like the host-only adapter.

... Promiscuous mode: "deny"...

Promiscuous mode should be set to "allow-all" traffic to the VMs, even with the recommended bridged adapter.
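For reference, both adapter changes can be made from the host with VBoxManage modifyvm while each VM is powered off. The sketch below only builds and prints the commands (a dry run). The VM names and the host interface name "enp3s0" are assumptions to replace with your own, and --nic2 none assumes the old host-only adapter is NIC 2.

```python
"""Sketch: build (but do not run) VBoxManage commands that switch each VM
to one bridged adapter with promiscuous mode set to allow-all.

VM names and the host interface are assumptions; adjust before use.
"""
import shlex

VMS = ["cp", "worker1", "worker2"]  # assumed VirtualBox VM names
HOST_IFACE = "enp3s0"               # assumed host NIC to bridge onto


def bridged_nic_cmd(vm: str, host_iface: str) -> list[str]:
    """Return the argv for one VM (the VM must be powered off)."""
    return [
        "VBoxManage", "modifyvm", vm,
        "--nic1", "bridged",             # adapter 1: bridged instead of NAT
        "--bridgeadapter1", host_iface,  # host interface to bridge onto
        "--nicpromisc1", "allow-all",    # promiscuous mode: allow all
        "--nic2", "none",                # drop the old host-only adapter
    ]


if __name__ == "__main__":
    for vm in VMS:
        # shlex.join quotes each argument so the line can be pasted into a shell.
        print(shlex.join(bridged_nic_cmd(vm, HOST_IFACE)))
```

The printed lines can be run by hand, or each argv can be passed to subprocess.run(..., check=True). Inside the guests, the Netplan configuration and /etc/hosts entries then need to follow the new bridged addresses.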

... Each machine can ping one another.

Ping only proves that ICMP traffic is allowed, and Kubernetes does not rely on ICMP. Instead, Kubernetes relies on the TCP and UDP protocols.
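A closer test than ping is to complete a TCP handshake to a port the cluster actually uses. Here is a minimal standard-library sketch. It assumes k8scp resolves on the node running it and that the API server listens on 6443, the port kubeadm uses by default. It says nothing about UDP traffic, such as Cilium's overlay tunnel, which would need a separate check.

```python
"""Sketch: check TCP reachability, which ping (ICMP) does not prove.

Host and port are assumptions: k8scp from /etc/hosts and 6443, the
kube-apiserver port kubeadm uses by default.
"""
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP handshake to host:port completes in time."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name lookup failed
        return False


if __name__ == "__main__":
    ok = tcp_reachable("k8scp", 6443)
    print("k8scp:6443", "reachable" if ok else "NOT reachable")
```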

- Host-only Ethernet IPv4: 192.168.56.1/24

- CP: 192.168.56.11/24

- Worker1: 192.168.56.21/24

- Worker2: 192.168.56.22/24

cluster-pool-ipv4-cidr: 192.168.0.0/16

Overlapping VM IP addresses with the pod IP pool is detrimental to routing within the cluster, since traffic meant for a pod can end up routed toward a VM, or the other way around. By default, VirtualBox uses the 192.168.56.0/24 IP range for host-only VMs. The pod IP pool (range) should be distinct, not overlapping the VM range. In addition to setting one single bridged adapter per VM and setting promiscuous mode to "allow-all", I recommend setting cluster-pool-ipv4-cidr to 10.200.0.0/16 in the cilium-cni.yaml manifest, and changing the podSubnet entry in the kubeadm-config.yaml manifest to the same 10.200.0.0/16 CIDR.

Regards,

-Chris
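The overlap described in the answer is easy to confirm with Python's ipaddress module. This sketch checks both pod pools from the thread against the VirtualBox host-only range. The same check applies to the podSubnet value in kubeadm-config.yaml, since the answer sets it to the same CIDR.

```python
"""Sketch: check whether the pod CIDR overlaps the VM network.

The ranges are the ones discussed in this thread.
"""
import ipaddress

VM_NET = ipaddress.ip_network("192.168.56.0/24")  # VirtualBox host-only range


def overlaps_vm_net(pod_cidr: str, vm_net=VM_NET) -> bool:
    """True if the pod pool and the VM range share any address."""
    return ipaddress.ip_network(pod_cidr).overlaps(vm_net)


if __name__ == "__main__":
    for cidr in ("192.168.0.0/16", "10.200.0.0/16"):
        verdict = "OVERLAPS" if overlaps_vm_net(cidr) else "ok"
        print(f"{cidr}: {verdict} with {VM_NET}")
```

After switching to a bridged adapter, VM_NET should be your LAN's subnet instead. A common home range such as 192.168.1.0/24 also falls inside 192.168.0.0/16, which is one more reason to move the pods to 10.200.0.0/16.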

Answers


Thank you very much!

I'll keep you posted. I might be off work soon enough to fix it tomorrow!


It works, it just works! HOORAY!

I tried tweaking the IP/CIDR ranges to keep things tight, around 60 nodes with 128 IPs each (I can't remember exactly). But in the end, I stopped playing games and just went with a bridged setup.
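The sizing trade-off mentioned above is plain arithmetic. Cilium's cluster-pool IPAM splits the pool into one fixed-size block per node, whose size is set by the per-node mask (cluster-pool-ipv4-mask-size in Cilium's config). The sketch below computes how many node blocks fit and how large each one is. The /19 pool with a /25 per node is only an illustration of how "64 nodes with 128 addresses each" can come about, not necessarily what I used. Cilium may reserve a few addresses per block, so the usable count can be slightly lower.

```python
"""Sketch: how many per-node pod blocks fit in a Cilium cluster pool.

Pool and mask sizes are example values, not a recommendation.
"""
import ipaddress


def pool_capacity(pool_cidr: str, node_mask: int) -> tuple[int, int]:
    """Return (number of node blocks, addresses per block)."""
    pool = ipaddress.ip_network(pool_cidr)
    if node_mask < pool.prefixlen:
        raise ValueError("per-node mask must be at least as long as the pool prefix")
    blocks = 2 ** (node_mask - pool.prefixlen)        # node blocks in the pool
    per_block = 2 ** (pool.max_prefixlen - node_mask)  # addresses per block
    return blocks, per_block


if __name__ == "__main__":
    for pool, mask in (("10.200.0.0/16", 24), ("10.200.0.0/19", 25)):
        blocks, per_block = pool_capacity(pool, mask)
        print(f"{pool} with /{mask} per node: {blocks} nodes x {per_block} addresses")
```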

OH MY BRIDGE!

I was even able to reuse my existing virtual machines—I DIDN’T HAVE TO START FROM SCRATCH!

Quick advice for anyone trying to learn from the ground up with a non-standard setup:

Keep snapshots at every step!

1. After SSH setup

2. After all Kubernetes packages are installed, /etc/hostname and /etc/hosts are set, and swapoff -a is done

→ From here, clone your first machine (named cp, for control plane) twice, once for each worker.

2.1. On each worker, set /etc/hostname and /etc/hosts, then save a snapshot.

3. After cp init (verify everything is OK with kubectl get -A pods)

→ Don't forget: kubectl needs to be configured to work. The kubeadm init output literally gives you 4 commands. Run them!

4. After Cilium is installed on the cp (check with kubectl get -A pods)

→ One control plane pod will stay at 0/1 until a second node joins. On to the next step!

5. After both worker nodes have joined

→ Check kubectl get -A pods again and make sure everything shows 1/1 and looks clean!
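The "everything shows 1/1" check can be scripted. Here is a minimal sketch that assumes the default column order of kubectl get pods -A --no-headers (NAMESPACE, NAME, READY, STATUS, then RESTARTS and AGE). not_ready() is a hypothetical helper, and SAMPLE is made-up output for illustration, not taken from my cluster.

```python
"""Sketch: flag pods that are not fully ready in `kubectl get pods -A`.

Assumes the default columns NAMESPACE NAME READY STATUS ... as printed
with --no-headers. SAMPLE is hypothetical output, for illustration only.
"""


def not_ready(kubectl_output: str) -> list[str]:
    """Return 'namespace/name (READY, STATUS)' for pods that need attention."""
    problems = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        namespace, name, ready, status = fields[:4]
        up, total = (int(n) for n in ready.split("/"))
        if status == "Completed":
            continue  # finished Job pods are expected to show 0/1
        if status != "Running" or up != total:
            problems.append(f"{namespace}/{name} ({ready}, {status})")
    return problems


SAMPLE = """\
kube-system   cilium-abcde       1/1   Running            0             10m
kube-system   cilium-fghij       0/1   CrashLoopBackOff   5 (30s ago)   10m
kube-system   coredns-1234-xyz   1/1   Running            0             12m
"""

if __name__ == "__main__":
    # Real use (assumption): feed it the output of
    #   kubectl get pods -A --no-headers
    for problem in not_ready(SAMPLE):
        print("NOT READY:", problem)
```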

AND YOU ARE D-D-D-D-D-D-DONE!

If you're following a strict tutorial, you might get through this in a few minutes and wonder why it ever seemed hard. But if you're not an everyday Linux user, you'll need to learn how to tweak configs (Ubuntu uses Netplan now), set up SSH (both server and client), and secure access properly.

SIMULATE your full working environment—from start to finish.

Break things on purpose. Try weird setups. Learn what fails and why.

Referential learning is the backbone of my job, but some things you really have to do once before you can just follow instructions blindly.

To whoever’s reading this: good luck!

And yes, follow the tutorial strictly if you don't want to waste time. I had to do it this way because I have great bare-metal plans for the future, so there was no shortcut.
