Lab 3.2 Section 15

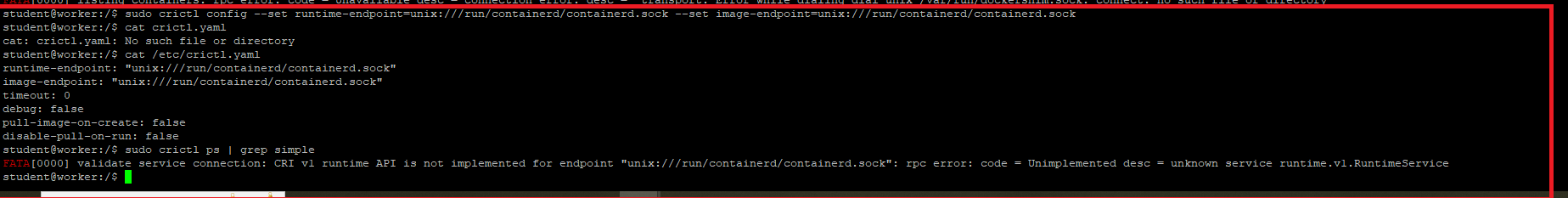

I am stuck on Section 15 of Lab 3.2, which verifies that simpleapp is running on the second node by using `sudo crictl ps`. I first set up the crictl config by mistake. However, I am getting the following error.

I know I have 6 instances of simpleapp deployed from the cp, so I am not sure what this error means: `FATA[0000] validate service connection: CRI v1 runtime API is not implemented for endpoint "unix:///run/containerd/containerd.sock": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService`

Best Answer

Hi @kenneth.kon001,

After some quick sanity testing, I can confirm that the custom containerd config is preserved across several cp and worker node reboots. This assumes the custom config is applied only once, following the recommended sequence, after local-repo-setup.sh has been executed only once. No other edits of config.toml are needed: even after several reboots, the custom entries are preserved.

Per my earlier edit, the repo/simpleapp image needs to be pushed again into the local registry (step 12, command #2), because the reboot clears the registry catalog. Without repopulating the registry catalog, the try1 Deployment's replicas will show ErrImagePull and CrashLoopBackOff statuses. Once the image push repopulates the registry, the try1 replicas will eventually retrieve the image and reach the Running state.
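A minimal sketch for a one-shot check of whether the try1 replicas have recovered after the push. It assumes the Deployment is named try1 as in the lab; `replicas_ready` is a hypothetical helper that compares a "ready/desired" string such as the one kubectl prints with a JSONPath template.

```shell
# Hypothetical helper: succeeds when a "ready/desired" string (e.g. "6/6")
# shows every replica ready. kubectl omits .status.readyReplicas when it is
# zero, so an empty ready count (e.g. "/6") is treated as 0.
replicas_ready() {
  local ready="${1%%/*}" desired="${1##*/}"
  [ -n "$desired" ] && [ "${ready:-0}" -eq "$desired" ]
}
```

Usage on the cp: `replicas_ready "$(kubectl get deployment try1 -o jsonpath='{.status.readyReplicas}/{.spec.replicas}')" && echo "try1 is fully up"`. For an interactive, blocking wait, `kubectl rollout status deployment/try1` is the built-in alternative.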

Regards,

-Chris

Answers

Hi @kenneth.kon001,

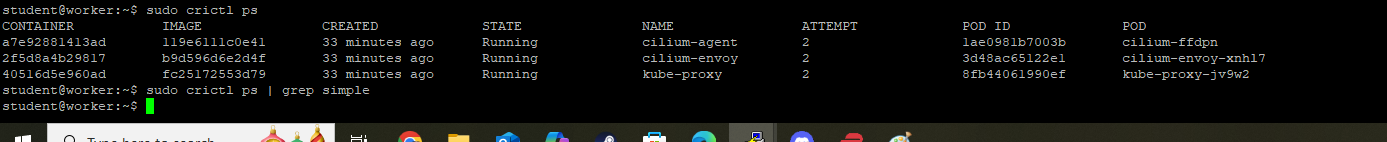

Your worker does not seem to host any active workload. It is the same issue as before: a misconfigured containerd runtime. Per the earlier discussion, apply the same solution on the worker node as well to configure the containerd runtime, which then enables the crictl CLI.
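A minimal diagnostic sketch to run on each node. It assumes (the thread does not show the file) that the misconfiguration is the one most often behind this exact "CRI v1 runtime API is not implemented" error: a `/etc/containerd/config.toml` that disables the CRI plugin, as the default file shipped with Docker's `containerd.io` package does. The function name `cri_plugin_disabled` is hypothetical.

```shell
# Hypothetical helper: succeeds (exit 0) when the given containerd config
# file has an uncommented disabled_plugins line that lists "cri".
# Defaults to the standard config path; reading it may require sudo.
cri_plugin_disabled() {
  local config_file="${1:-/etc/containerd/config.toml}"
  grep -Eq '^[[:space:]]*disabled_plugins[[:space:]]*=.*"cri"' "$config_file"
}
```

If it reports the plugin as disabled, apply the lab's containerd configuration on that node, then restart the runtime with `sudo systemctl restart containerd` and re-run `sudo crictl ps`.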

Regards,

-Chris

Weird, I thought I ran the process on the worker node. Let me try that. @chrispokorni, I appreciate you messaging me back.

Alright, this is what I thought. I did follow the steps you mentioned. However, when I run `sudo reboot`, it resets config.toml, so I have to repeat the step.

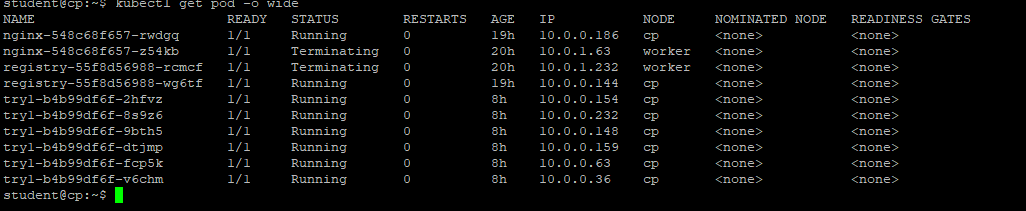

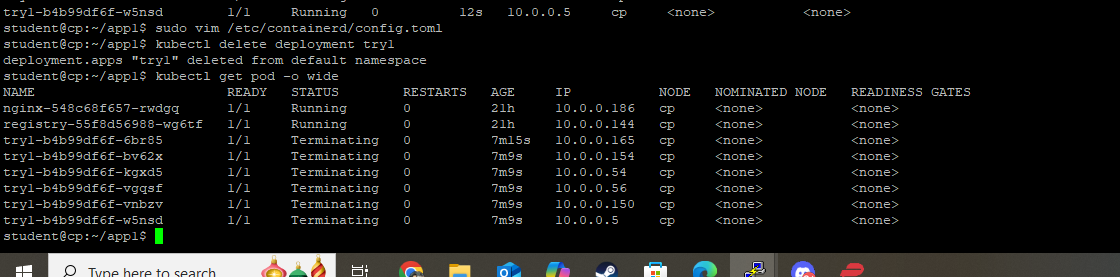

So now I am running into a problem: I can run `sudo crictl ps`, but I do not see the simpleapp container on the worker node. When I created the try1 Deployment of simpleapp from the cp with `kubectl create deployment try1 --image=$repo/simpleapp`, the node its pods are created on shows cp instead of worker. Do you know what might be causing this issue?


Hi @kenneth.kon001,

Your try1 Deployment's pod replicas are all hosted by the control plane node as a result of the scheduling process. I would expect some, but not necessarily all, of these six replicas to be scheduled onto the worker node as well. If none are scheduled onto the worker node, that may indicate an unreachable, unschedulable, or unhealthy worker node. Also, the "Terminating" status of all six replicas is not desirable.

From your output, it seems your worker node is only hosting the three networking infrastructure pods. What is the state of your cluster overall? What output is produced by:

`kubectl get nodes -o wide`

`kubectl get pods -A -o wide`
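To see at a glance how the try1 replicas are spread across nodes, a small sketch with a hypothetical helper `pods_per_node` counts the node names it reads on stdin. It relies on `kubectl create deployment try1` labeling its pods `app=try1`.

```shell
# Hypothetical helper: reads one node name per line on stdin and prints
# "<node>: <pod count>" for each distinct node, sorted by node name.
pods_per_node() {
  sort | uniq -c | awk '{ print $2 ": " $1 }'
}
```

Usage on the cp: `kubectl get pods -l app=try1 -o custom-columns=NODE:.spec.nodeName --no-headers | pods_per_node`. Pods that have not been scheduled yet appear under `<none>`.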

I will take another look at the containerd configuration solution and check it against node reboots.

EDIT: The registry catalog is expected to be empty after a reboot, so the simpleapp image must be pushed again after each reboot in order to launch the try1 Deployment.
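A minimal sketch for checking whether the local registry still lists simpleapp before launching try1. It assumes `$repo` holds the registry's host:port and that the registry serves the standard Docker Registry HTTP API v2 (`/v2/_catalog`) over plain HTTP; `catalog_has_repo` is a hypothetical helper.

```shell
# Hypothetical helper: succeeds if a /v2/_catalog JSON response such as
# {"repositories":["simpleapp"]} lists the given repository name.
# -F matches the quoted name literally rather than as a regex.
catalog_has_repo() {
  local catalog_json="$1" repo_name="$2"
  printf '%s' "$catalog_json" | grep -qF "\"$repo_name\""
}
```

Usage: `catalog_has_repo "$(curl -s "http://$repo/v2/_catalog")" simpleapp || echo "registry is empty: push the simpleapp image again (step 12, command #2)"`.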

Regards,

-Chris

Hi @chrispokorni,

Pushing the repo/simpleapp image into the local registry again did the trick. Thank you for the help. Hopefully I do not run into any more weird bugs.
